I had the pleasure of speaking at the National Strength and Conditioning Association’s (NSCA) National Conference this summer, and while there I made a point of attending the Sport Science & Performance Technology Special Interest Group meeting as well.

One thing that immediately stood out to me was the number of questions about which technologies to purchase (e.g. “Which brand of force plates should we buy?”, “Does anyone have a list comparing and contrasting different technologies so that we can determine what would be best for us?”, etc.).

While these are fine questions, I do feel they are a bit like putting the cart before the horse. Before thinking about what technology to purchase, we should spend a good bit of time gaining clarity on the question(s) we are attempting to answer. Once we have a firm understanding of the question, we can begin evaluating whether a technology exists that can help us collect the data needed to explore it. In fact, this was the crux of my lecture at the conference, where I spoke about using the PPDAC (Problem, Plan, Data, Analysis, Conclusion) Framework in practice (I wrote an article about this framework a couple of years ago).

A force plate, a GPS unit, or an accelerometer won’t solve all of our problems. In fact, depending on our question, they might not solve any of our problems! Moreover, as sport scientists we need to concern ourselves not only with the research question but also with whether the desired technology is useful within our ecological setting. Just because something worked in a controlled lab environment or was valid in a different sport does not mean it will be useful for our sport, or in our setting, or with our athletes, or given our unique constraints.

So, I decided to share a few resources pertaining to measurement theory concepts such as validity, reliability, and responsiveness/sensitivity for those working in the sport science space who are interested in more critical approaches to evaluating the technology we use in practice.

- Impellizzeri & Marcora – Test Validation in Sport Physiology: Lessons Learned From Clinimetrics.

- Weir – Quantifying Test-Retest Reliability Using The Intraclass Correlation Coefficient and the SEM.

- Atkinson & Nevill – Statistical Methods For Assessing Measurement Error (Reliability) in Variables Relevant to Sports Medicine.

- Currell & Jeukendrup – Validity, Reliability and Sensitivity of Measures of Sporting Performance.

- Bland & Altman – Statistical methods for assessing agreement between two methods of clinical measurement.

- Swinton et al. – A statistical framework to interpret individual response to intervention: Paving the way for personalized nutrition and exercise prescription.
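To make a couple of these ideas concrete, here is a minimal Python sketch of two common calculations from this literature: Bland-Altman bias with 95% limits of agreement (agreement between two devices) and the typical error from test-retest data (the standard deviation of the difference scores divided by √2). The function names and the jump-height numbers are made up purely for illustration, and this is only a rough starting point, not a substitute for the fuller treatments in the papers above.

```python
import math
import statistics


def bland_altman(method_a, method_b):
    """Return (bias, lower_loa, upper_loa) for paired measurements.

    bias is the mean of the paired differences (a - b); the 95% limits
    of agreement are bias +/- 1.96 * SD of the differences.
    """
    if len(method_a) != len(method_b):
        raise ValueError("Both methods need the same number of observations")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    return bias, bias - 1.96 * sd_diff, bias + 1.96 * sd_diff


def typical_error(trial_1, trial_2):
    """Typical error of measurement from two trials: SD(differences) / sqrt(2)."""
    if len(trial_1) != len(trial_2):
        raise ValueError("Both trials need the same number of observations")
    diffs = [t2 - t1 for t1, t2 in zip(trial_1, trial_2)]
    return statistics.stdev(diffs) / math.sqrt(2)


if __name__ == "__main__":
    # Hypothetical countermovement jump heights (cm) for five athletes.
    force_plate = [38.2, 41.5, 35.9, 44.1, 39.7]
    jump_mat = [39.0, 42.9, 36.1, 45.6, 40.2]
    bias, lower, upper = bland_altman(jump_mat, force_plate)
    print(f"Bias: {bias:.2f} cm, 95% LoA: {lower:.2f} to {upper:.2f} cm")

    # Hypothetical test-retest data on the same device, a few days apart.
    day_1 = [38.2, 41.5, 35.9, 44.1, 39.7]
    day_2 = [37.6, 42.3, 36.4, 43.5, 40.5]
    print(f"Typical error: {typical_error(day_1, day_2):.2f} cm")
```

Whether limits of agreement or a typical error of that size are acceptable depends entirely on the question being asked and the size of change that matters in our setting, which is the point of clarifying the question first.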

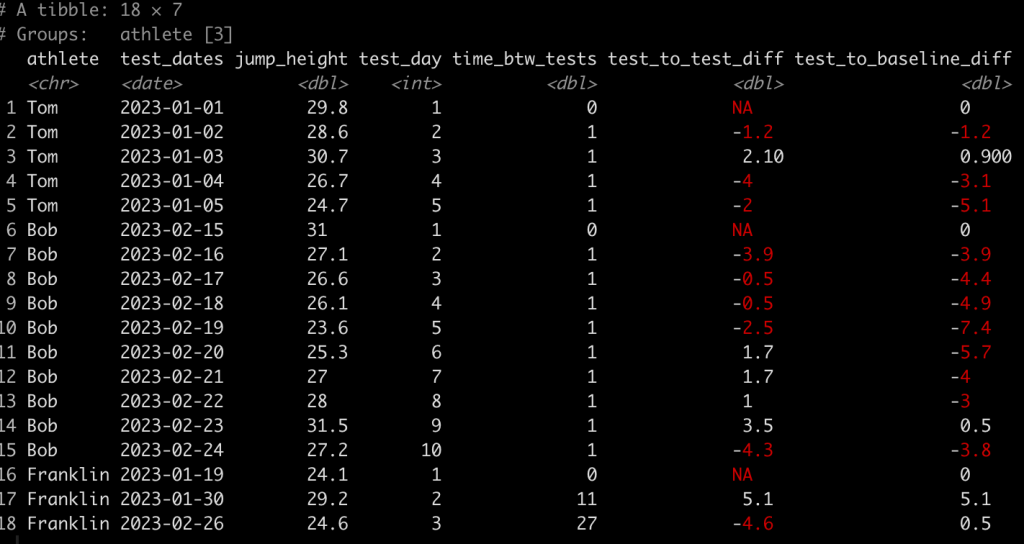

Additionally, for those interested, several years ago I wrote a full R code blog for the last paper above (Swinton et al.), which can be accessed HERE.

Happy reading!