This week Ellis Hughes and I wrap up our Intro to Bayesian Analysis series. Up to this point we’ve been talking about conjugate priors for the binomial, Poisson, and normal distributions.

Unfortunately, when using the normal-normal conjugate you need to assume that one of the two distribution parameters (mean or standard deviation) is known and then estimate the other. For example, last episode we assumed the standard deviation was known, allowing us to estimate the mean. This is a problem in the many situations where you don’t know the standard deviation and, therefore, need to estimate both parameters. For this, we turn to Gibbs sampling. A Gibbs sampler is a Markov Chain Monte Carlo (MCMC) approach to Bayesian inference that draws each parameter in turn from its distribution conditional on the current value of the other.
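To make the idea concrete, here is a sketch of the two conditional distributions a Gibbs sampler for the normal model alternates between. It assumes a semi-conjugate setup: a normal prior on the mean and an inverse-gamma prior on the variance. The symbols $\mu_0$, $\tau_0^2$, $a$, and $b$ are our own notation for the prior parameters, not something taken from the episode, and other prior choices would give different conditionals.

```latex
% Model and (assumed) priors
y_i \mid \mu, \sigma^2 \sim \mathcal{N}(\mu, \sigma^2), \quad i = 1, \dots, n
\qquad
\mu \sim \mathcal{N}(\mu_0, \tau_0^2)
\qquad
\sigma^2 \sim \text{Inv-Gamma}(a, b)

% Full conditional for the mean, given the current variance
\mu \mid \sigma^2, y \sim \mathcal{N}\!\left(
  \frac{\mu_0 / \tau_0^2 + n \bar{y} / \sigma^2}{1 / \tau_0^2 + n / \sigma^2},\;
  \frac{1}{1 / \tau_0^2 + n / \sigma^2}
\right)

% Full conditional for the variance, given the current mean
\sigma^2 \mid \mu, y \sim \text{Inv-Gamma}\!\left(
  a + \frac{n}{2},\;
  b + \frac{1}{2} \sum_{i=1}^{n} (y_i - \mu)^2
\right)
```

Each iteration draws a new mean from the first conditional using the current variance, then a new variance from the second conditional using that new mean. After enough iterations, the paired draws behave like samples from the joint posterior.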

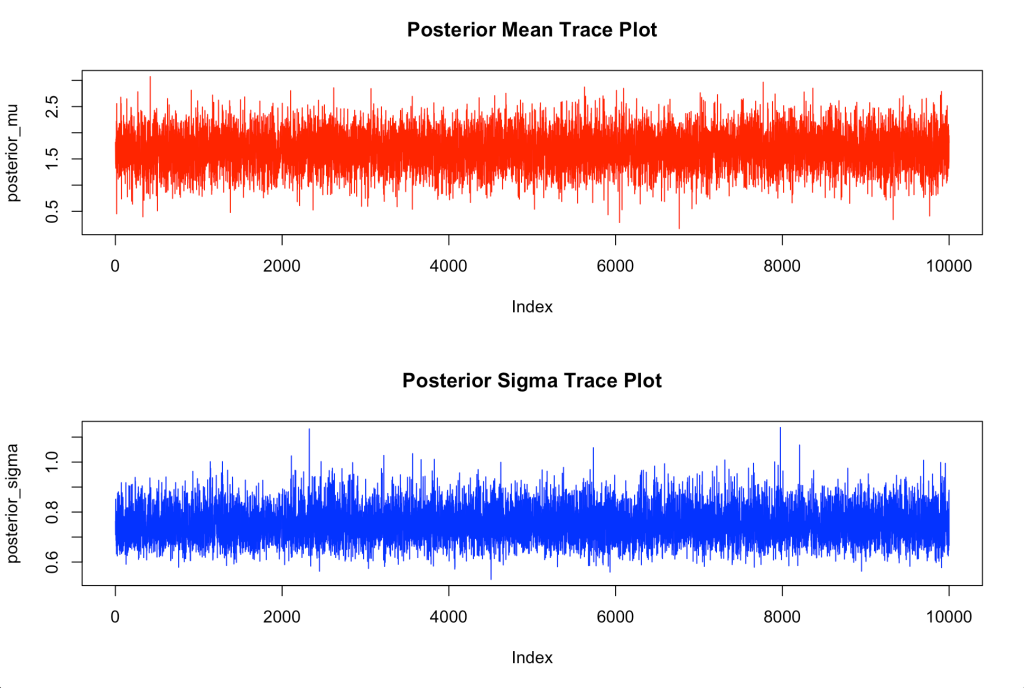

In this episode we will walk through building your own Gibbs sampler, calculating posterior summary statistics, and plotting the posterior samples and trace plots. We wrap up by showing how to use the `normpostsim()` function from Jim Albert’s {LearnBayes} package, for instances where you don’t want to code up your own Gibbs sampler.
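If you want a feel for the steps before watching, below is a minimal sketch of a Gibbs sampler for a normal model with unknown mean and standard deviation. It is not the code from the episode (that is written in R); it is a standard-library Python illustration. It assumes a normal prior on the mean and an inverse-gamma prior on the variance, and the function name `gibbs_normal`, the prior parameters (`mu0`, `tau0_sq`, `a`, `b`), and the simulated data are all hypothetical choices made for the example.

```python
import random
import statistics


def gibbs_normal(y, n_iter=5000, burn_in=1000,
                 mu0=0.0, tau0_sq=1e6, a=0.01, b=0.01, seed=1):
    """Gibbs sampler for y ~ Normal(mu, sigma^2) with both parameters unknown.

    Assumed priors (illustrative, weakly informative):
      mu      ~ Normal(mu0, tau0_sq)
      sigma^2 ~ Inverse-Gamma(shape=a, rate=b)
    Returns post-burn-in draws of mu and of sigma (the standard deviation).
    """
    rng = random.Random(seed)
    n = len(y)
    ybar = statistics.fmean(y)

    # Start the chain at the sample estimates.
    mu = ybar
    sigma_sq = statistics.variance(y)

    mu_draws, sigma_draws = [], []
    for i in range(n_iter):
        # Step 1: draw mu from its conditional given the current sigma^2.
        precision = 1.0 / tau0_sq + n / sigma_sq
        cond_mean = (mu0 / tau0_sq + n * ybar / sigma_sq) / precision
        mu = rng.gauss(cond_mean, (1.0 / precision) ** 0.5)

        # Step 2: draw sigma^2 from its conditional given the new mu.
        # If g ~ Gamma(shape, rate) then 1 / g ~ Inverse-Gamma(shape, rate);
        # gammavariate takes a scale argument, so we pass 1 / rate.
        shape = a + n / 2.0
        rate = b + 0.5 * sum((yi - mu) ** 2 for yi in y)
        sigma_sq = 1.0 / rng.gammavariate(shape, 1.0 / rate)

        # Keep only the draws after the burn-in period.
        if i >= burn_in:
            mu_draws.append(mu)
            sigma_draws.append(sigma_sq ** 0.5)

    return mu_draws, sigma_draws


# Simulated data with a true mean of 10 and a true standard deviation of 2.
data_rng = random.Random(42)
y = [data_rng.gauss(10.0, 2.0) for _ in range(200)]

mu_draws, sigma_draws = gibbs_normal(y)

# Posterior summaries: mean and an equal-tailed 95% credible interval.
for name, draws in (("mu", mu_draws), ("sigma", sigma_draws)):
    cuts = statistics.quantiles(draws, n=40)  # cut points every 2.5%
    print(name, round(statistics.fmean(draws), 3),
          (round(cuts[0], 3), round(cuts[-1], 3)))
```

Plotting `mu_draws` or `sigma_draws` against their index gives a trace plot, and a histogram of the same draws gives the posterior distribution. The burn-in length and the number of iterations here are arbitrary and worth checking against the trace plot for your own data.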

To watch our screencast, CLICK HERE.

To access the code, CLICK HERE.