A few weeks ago I was speaking with some athletic trainers and strength coaches who work for a university football team. They asked me the following question:

“We are about to start using GPS to collect data on our team. But we have never collected anything in the past. How do we even start to understand whether the training sessions we are doing are normal or not? Do we need to tell the coach that we have to collect data for a full season before we know anything meaningful?”

This is a fascinating question and it is an issue that we all face at some point in the applied setting. Whenever we start with a new data collection method or a new technology it can be daunting to think about how many observations we need in order to start making sense of our data and establishing what is “normal”.

We always have some knowledge!

My initial reaction to the question was, “Why do you believe that you have NOTHING to make a decision on?”

Sure, you currently have no data on your specific team, but that doesn’t mean that you have no prior knowledge or expectations! This is where Bayes can help us out. We can begin collecting data on day 1, combine it with our prior knowledge, and continually update our knowledge until we get to a point where we have enough data on our own team that we no longer need the prior.

Where does our prior knowledge come from?

Establishing the prior in this case can be done in two ways:

- Get some video of practices, watch a few players in each position group, and record, as best you can estimate, the distance they covered on each rep of each training drill.

- Pull some of the prior research on college football and make a logical estimate of what you’d expect a college football practice to look like with respect to various training metrics (total distance, sprints, high-speed running, accelerations, etc.).

Option 1 is a little time consuming (though you probably won’t need to do as many practices as you think) and probably not the option most people want to hear. Side note: I’ve done this before and, yes, it does take some time, but you learn a good deal about practice by manually notating it. When estimating total distance, remember that if a WR runs a route they also have to run back to the line of scrimmage once the play is over, so factor that into the distance covered in practice.

Option 2 is reasonably simple. Off the top of my head, the two papers that could be useful here are from DeMartini et al (2011) and Wellman et al (2016). The former quantifies training demands in collegiate football practices while the latter is specific to the quantification of competitive demands during games. To keep things brief for the purposes of this blog post, I’ll stick to total distance. I’ve summarized the findings from these papers in the table below.

Notice that the DeMartini paper uses a broader position classification: Linemen or Non-Linemen. As such, it is important to consider that the means and standard deviations might be influenced by the different ergonomic demands of the groups that have been pooled together. Also, DeMartini’s paper covers practice demands, so the overall total distance may differ from what we would see in games, which is what Wellman’s data is showing us. All that aside, we can still use this information to get a general sense for a prior.

Let’s bin the players into groups that compete against each other and therefore share some level of physical attributes.

Rather than getting overly complicated with Markov Chain Monte Carlo, we will use the normal-normal conjugate (which we discussed in TidyX 102). This approach provides us with a simple shortcut for performing Bayesian inference when dealing with data coming from a normal distribution. To make this approach work, we need three pieces of prior information:

- A prior mean (prior mu)

- A prior standard deviation for the mean (sigma) which we will convert to precision (1 / sigma^2)

- An assumed/known standard deviation for the data

The first two are rather easy to wrap our heads around. We need to establish a reasonable prior estimate for the average total distance and some measure of variability around that mean. The third piece of information is the standard deviation of the data and we need to assume that it is known and fixed.

We are dealing with a Normal distribution, which is a two-parameter distribution with a Mean and a Standard Deviation. Both of these parameters have uncertainty around them (they have their own measures of center and dispersion). The Mean is what we are trying to figure out for our team, so we set a prior center (mu) and dispersion (sigma) around it. Because we are stating up front that the Standard Deviation for the population is known, we are not concerned with the dispersion around that parameter (if we don’t want to make this assumption, we will need an approach that estimates both parameters, such as Gibbs sampling).
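To make the three inputs concrete, here is a minimal sketch using the skill-position values that are set later in this post (prior mean 4455, prior SD 500, assumed data SD 750). The `prior_weight` name is only for illustration and is not part of the original code; it expresses roughly how many training sessions’ worth of information the prior carries.

```r
## The three inputs for the normal-normal conjugate
mu_prior   <- 4455   # prior mean for total distance
mu_sd      <- 500    # prior SD around that mean
assumed_sd <- 750    # SD of the data, treated as known and fixed

## Convert both standard deviations to precisions (1 / sd^2)
tau_prior   <- 1 / mu_sd^2
assumed_tau <- 1 / assumed_sd^2

## Illustrative only: the prior's weight expressed in "sessions of data"
prior_weight <- tau_prior / assumed_tau
prior_weight
```

With these values the prior counts for about as much as 2.25 sessions of data, so it should be outweighed fairly quickly once real observations arrive.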

Setting Priors

Let’s stick with the Skill Positions for the rest of this article. We can take an average of the WR, DB, and RB distances to get a prior mean. The dispersion around this mean is trickier because Wellman’s paper only tells us the total number of athletes in their sample, not the number of athletes per position. From the table above we see that the WR group has a standard deviation of 996. We will assume that 5 WRs were tracked, so the standard error of the mean (the dispersion around the mean) ends up being 996 / sqrt(5) ≈ 445. Since we also have DBs and RBs in our skill grouping, let’s just round that up to 500. Finally, just eyeballing the standard deviations in the table above, I set the known SD for the population of skill positions to be 750. My priors for all three of our position groups are as follows:

Bayesian Updating

Looking at the Skill Positions, what we want to do is observe each training session for our team and update our prior knowledge about the normal amount of total running distance we expect skill position players to do given what we know.

First, let’s specify our priors and then create a table of 10 training sessions that we’ve collected on our team. I’ve also created a column that provides a running/cumulative total distance for all of the sessions as we will need this for our normal-normal conjugate equation.

library(tidyverse)

## set a prior for the mean

mu_prior <- 4455

mu_sd <- 500

tau_prior <- 1/mu_sd^2

## To use the normal-normal conjugate we will make an assumption that the standard deviation is "known"

assumed_sd <- 750

assumed_tau <- 1 / assumed_sd^2

## Create a data frame of observations

df <- data.frame(

training_day = 1:10,

dist = c(3800, 3250, 3900, 3883, 3650, 3132, 3300, 3705, 3121, 3500)

)

## create a running/cumulative sum of the outcome of interest

df <- df %>%

mutate(total_dist = cumsum(dist))

df

We discussed the equation for updating our prior mean in TidyX 102. We will convert the standard deviations to precision (1/sd^2) for the equations below. The equation for updating our knowledge about the average running distance in practice for our skill players is as follows:
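Written out in standard notation (the symbols are labels chosen here to mirror the variable names in the code): let $\mu_0$ and $\tau_0$ be the prior mean and prior precision, $\tau$ the assumed known precision of the data, and $x_1, \dots, x_n$ the observed session distances. A sketch of the normal-normal update is then:

$$
\mu_{post} = \frac{\tau_0 \, \mu_0 + \tau \sum_{i=1}^{n} x_i}{\tau_0 + n \, \tau},
\qquad
\tau_{post} = \tau_0 + n \, \tau,
\qquad
\sigma_{post} = \sqrt{\frac{1}{\tau_{post}}}
$$

Applying this one session at a time (with $n = 1$ and the previous posterior used as the new prior) gives the same answer as applying it once to all $n$ sessions with the original prior.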

Because we want to do this in-line, we will update our knowledge about our team’s training after every training session. As such, the mu_prior and tau_prior for each session come from the row above it, and session 1 is updated using the initial priors. To make this work, we will program a for() loop in R which will update our priors after each new observation.

First, we create a few vectors to store our values. NOTE: The vectors need to be 1 row longer than the number of observations we have in the data set since we will be starting with priors before observing any data.

## Create a vector to store results from the normal-normal conjugate model

N <- length(df$dist) + 1

mu <- c(mu_prior, rep(NA, N - 1))

tau <- c(tau_prior, rep(NA, N - 1))

SD <- c(mu_sd, rep(NA, N - 1))

Next, we are ready to run our for() loop and then put the output after each observation into the original data set (NOTE: remember to remove the first element of each output vector since it just contains our priors, before observing any data).

## For loop to continuously update the prior with every new observation

for(i in 2:N){

  ## mu[i - 1] and tau[i - 1] hold the previous posterior (or the initial priors when i = 2),

  ## so only the newest session's distance is added here (n = 1), not the cumulative total

  numerator <- tau[i - 1] * mu[i - 1] + assumed_tau * df$dist[i - 1]

  denominator <- tau[i - 1] + assumed_tau

  mu[i] <- numerator / denominator

  tau[i] <- denominator

  SD[i] <- sqrt(1 / denominator)

}

df$mu_posterior <- round(mu[-1], 0)

df$SD_posterior <- round(SD[-1], 0)

df$tau_posterior <- tau[-1]

df

The final row in our data set represents the most up-to-date knowledge we have about our skill players’ average total running distance at practice (mu_posterior = 3695 ± 214 yards). This is the same answer we would get by plugging the initial priors, the cumulative distance after 10 sessions (total_dist = 35241), and the number of sessions into the equation in a single step. We can compare these results to summary statistics produced on the ten rows of our distance data:

### look at the summary stats after 10 sessions

mean(df$dist) # Mean

sd(df$dist) / sqrt(10) # Standard Error of the Mean

sd(df$dist) # Standard Deviation

The posterior mean (mu_posterior = 3695) has moved most of the way from our prior (4455) toward what we have observed for our skill players after 10 training sessions (3524 with a standard error of 96). The posterior SD of the mean (SD_posterior = 214) is wider than the observed standard error because it is driven by our assumed SD, which was rather large to begin with (750), while the standard deviation for our skill players over the 10 observed sessions is much lower (305).

We’ve effectively started with prior knowledge of how much average total distance per training session we expect our skill players to perform and updated that knowledge, after each session, to learn as we go rather than waiting for enough data to begin having discussions with coaches.
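As a quick sanity check, here is a minimal sketch in base R showing that updating one session at a time lands on the same posterior as applying the closed-form equation to all sessions at once. The function names `update_once` and `update_batch` are illustrative and not part of the original code.

```r
## Priors and assumed data precision, as set earlier in the post
mu_prior    <- 4455
tau_prior   <- 1 / 500^2
assumed_tau <- 1 / 750^2

dist <- c(3800, 3250, 3900, 3883, 3650, 3132, 3300, 3705, 3121, 3500)

## One-session update: the previous posterior acts as the prior (n = 1)
update_once <- function(mu, tau, x, tau_data) {
  tau_new <- tau + tau_data
  mu_new  <- (tau * mu + tau_data * x) / tau_new
  list(mu = mu_new, tau = tau_new)
}

## Closed-form update from the original prior, the sum, and the session count
update_batch <- function(mu0, tau0, x, tau_data) {
  tau_new <- tau0 + length(x) * tau_data
  mu_new  <- (tau0 * mu0 + tau_data * sum(x)) / tau_new
  list(mu = mu_new, tau = tau_new)
}

## Sequential pass over the 10 sessions
post <- list(mu = mu_prior, tau = tau_prior)
for (x in dist) {
  post <- update_once(post$mu, post$tau, x, assumed_tau)
}

## Single batch update over the same 10 sessions
batch <- update_batch(mu_prior, tau_prior, dist, assumed_tau)

## Both routes should agree on the posterior mean and posterior SD
all.equal(post$mu, batch$mu)
all.equal(sqrt(1 / post$tau), sqrt(1 / batch$tau))
```

Because the two routes agree, you can either update after every session or recompute from the running total whenever that is more convenient.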

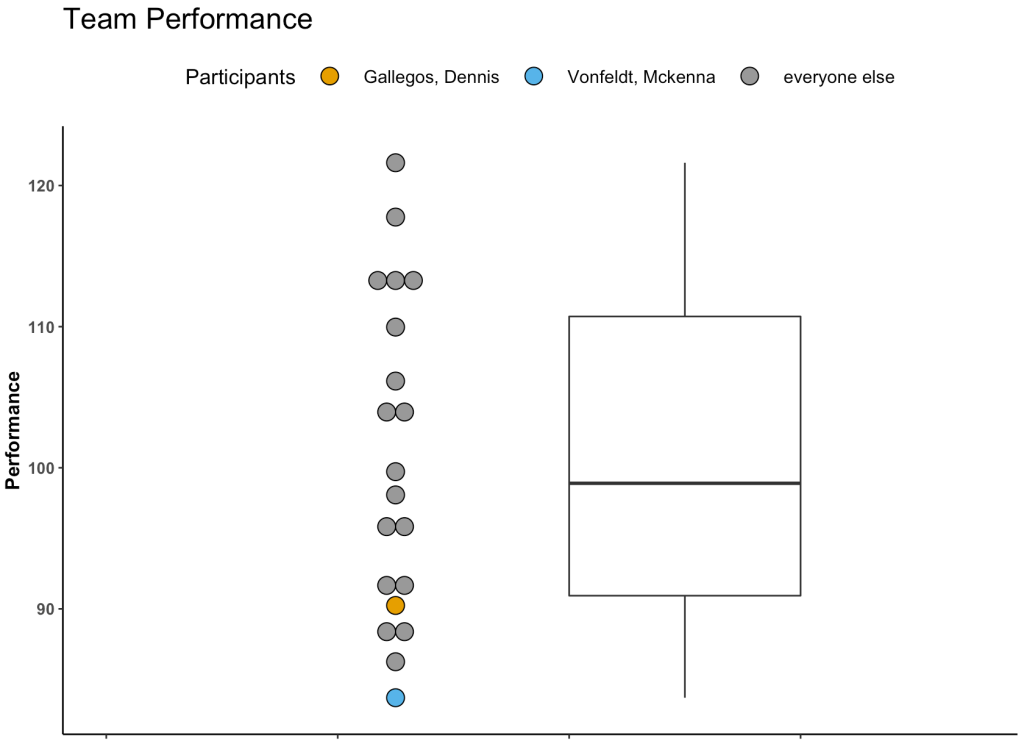

Plot the data

Finally, let’s make a plot of the data to see what it looks like.

The grey shaded region shows the 95% credible interval around the posterior mean (red line), both of which are updated after each training session. Notice how the posterior mean moves away from the prior and toward something that is bespoke to our team’s skill players as sessions accumulate, while the interval narrows. The dashed line represents the average of our skill players’ total distance after 10 sessions. Note that we would not be able to compute this line until after the 10 sessions (for a team that practices 3 times a week, that would take more than 3 weeks!). Also note that taking a rolling average over such a short time period (e.g., a rolling average of every 3 or 4 sessions) wouldn’t have produced the amount of learning that we were able to obtain with the Bayesian updating approach.
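Here is a minimal sketch of how a figure like this could be drawn with ggplot2. It recomputes the posterior after each session from the priors and the ten distances, and uses the posterior mean ± 1.96 posterior SDs for the shaded band. The object names (`plot_df`, `p`) and the styling choices are assumptions for illustration, not necessarily the code behind the original figure.

```r
library(ggplot2)

## Priors and assumed data precision, as set earlier in the post
mu_prior    <- 4455
tau_prior   <- 1 / 500^2
assumed_tau <- 1 / 750^2

dist <- c(3800, 3250, 3900, 3883, 3650, 3132, 3300, 3705, 3121, 3500)
n    <- seq_along(dist)

## Posterior precision, mean, and SD after each session (closed form)
tau_post <- tau_prior + n * assumed_tau
mu_post  <- (tau_prior * mu_prior + assumed_tau * cumsum(dist)) / tau_post
sd_post  <- sqrt(1 / tau_post)

plot_df <- data.frame(
  training_day = n,
  mu_posterior = mu_post,
  lower = mu_post - 1.96 * sd_post,
  upper = mu_post + 1.96 * sd_post
)

## Grey band = 95% interval, red line = posterior mean,
## dashed line = plain average of the 10 observed sessions
p <- ggplot(plot_df, aes(x = training_day, y = mu_posterior)) +
  geom_ribbon(aes(ymin = lower, ymax = upper), fill = "grey", alpha = 0.5) +
  geom_line(color = "red") +
  geom_hline(yintercept = mean(dist), linetype = "dashed") +
  scale_x_continuous(breaks = n) +
  labs(x = "Training session", y = "Total distance (posterior mean)")

p
```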

Wrapping Up

After the first 3 sessions we’d be able to inform the coach that our skill players are performing less total running distance than what we initially believed skill players in college football would do, based on prior research. This is neither good nor bad; it just is. It may be more a reflection of the style of practice or the schematics that our coach employs compared to those of the teams in the original research.

After about 6 sessions we get a clearer picture of the running demands of our skill players, which helps the coach make a more informed decision about the total distance they are performing and, hopefully, assists with practice planning and weekly periodization. After about 9 or 10 sessions the posterior is driven mostly by our own team’s data rather than the prior, and we can begin to use our own data to make informed decisions.

Most importantly, we were able to update our knowledge about the running demands of our skill players, in real time, without waiting several weeks to figure out what training looks like for our team.

How much less running are our skill players doing compared to those of the players reported in the study?

This is a logical next question a coach might ask. For this we’d have to use a different type of Bayesian approach to compare what we are observing to our prior parameters and then estimate the magnitude of the difference. We will save this one for another blog post, though.

Finally, this Bayesian updating approach is not only useful when just starting to collect new data on your team. You can use priors from this season at the start of training camp next season to compare work rates to what you’d believe to be normal for your own team. You can also use this approach for the start of new training phases or for return to play, when a player begins a running program. Any time you start collecting new data on new people there is an opportunity to start out with your prior knowledge and beliefs and update as you go along. You always have some knowledge — usually more than you think!

All of the code for this article is available on my GitHub page.